3 Tips for Debugging Cloud Scale Machine Learning Workloads

Let's say you built an amazingly wonderful hand-crafted artisanal convolutional neural network that works beautifully on your local, hard-drive-based dataset. You are now ready to take this masterpiece to the cloud to work on a much larger dataset on beefier machines, but you are not looking forward to it:

- "How do I ship this patchwork conda (or venv) environment where I've installed everything the training/inference code needs AS WELL AS everything else I thought I needed along the way?"

- "I don't want to waste cloud compute money on things I'm not sure will work on the first try!!"

- Basically, "I have already done the work and I'm not interested in the yak shaving portion of the job!"

No need to fear, dear reader: this article is designed to help you move your glorious work to the cloud (and beyond) by treating your local environment as if it were the cloud itself.

Moving to the Cloud

I'm familiar with Azure and its machine learning services, so I will mostly focus on that technology. The principles, however, should be universal, and the tips should work elsewhere too. The code is all available should you desire to peruse what I've built. It's basically a convolutional neural network that classifies tacos and burritos (because who doesn't love good Mexican food?).

Tip 1 - Cloud Training (but locally)

The first step to moving your training to the cloud is describing the configuration of the actual run: both what to run and how to run it. In the Azure Machine Learning service (AML for short) there are a number of fun ways to describe these things. Here I will focus primarily on the Python SDK and CLI. The first important concept is the ScriptRunConfig shown below:

```python
from azureml.core import ScriptRunConfig
from azureml.core.runconfig import RunConfiguration


def main():
    # what to run
    script = ScriptRunConfig(source_directory=".",
                             script="train.py",
                             arguments=[
                                 "-d", "/data",
                                 "-e", "1"])

    # running the script
    config = RunConfiguration()

    # tie everything together
    config.environment = create_env()
    config.target = "local"
    script.run_config = config
```

It basically marries the what (run train.py with -d /data -e 1) with the how (the RunConfiguration). The first essential part of the RunConfiguration is describing the Python Environment:

```python
from azureml.core import Environment


def create_env(is_local=True):
    # environment
    env = Environment(name="foodai-pytorch")
    env.python.conda_dependencies = dependencies()

    # more here soon - this is the cool part

    return env
```

Nothing super interesting here other than constructing the CondaDependencies:

```python
from azureml.core.conda_dependencies import CondaDependencies


def dependencies():
    conda_dep = CondaDependencies()
    conda_dep.add_conda_package("matplotlib")
    conda_dep.add_pip_package("numpy")
    conda_dep.add_pip_package("pillow")
    conda_dep.add_pip_package("requests")
    conda_dep.add_pip_package("torchvision")
    conda_dep.add_pip_package("onnxruntime")
    conda_dep.add_pip_package("azureml-defaults")
    return conda_dep
```

Running this directly in an AML Workspace does not take too much more:

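```python
from azureml.core import Experiment, Workspace

# get ref to AML Workspace
ws = Workspace.from_config()

# name the experiment (or cloud training run)
exp = Experiment(workspace=ws, name="foodai")

# `script` is the ScriptRunConfig built in main(); for a real cloud run,
# config.target must name a compute target in the workspace, not "local"
run = exp.submit(config=script)
run.wait_for_completion(show_output=True)
```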

This will (in theory) run your amazing model in the cloud (modulo where it gets the data, which I will leave for another time)! This sometimes takes a while, and every mistake adds more time to the whole process. AML basically needs to copy your script, rebuild the environment from scratch, load everything up, run it, and FAIL. This happened to me a couple of times because I forgot matplotlib or didn't add requests or something else mundane (all my fault, although I never admitted it to the product team).

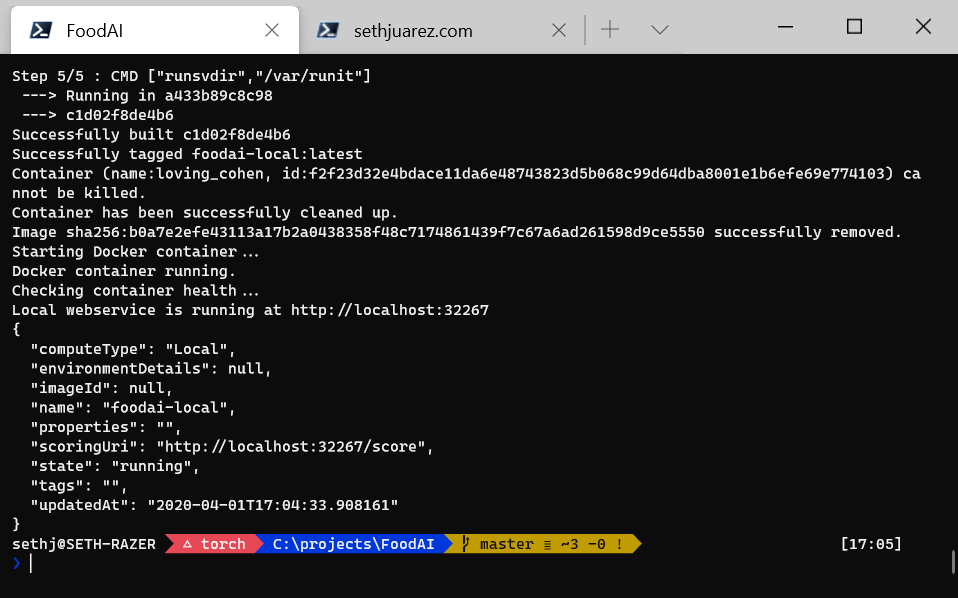

So how can I run this locally as if it were running in the cloud?? This is the 5 million currency-of-your-choice question. It turns out there's a fun feature that lets us set where the thing runs. If you don't know much about Docker, think of a container as a mini, stripped-down machine with only the essentials built in to run just your training code. The RunConfiguration built above is basically a set of instructions for building a Docker image that then gets run as a container in the cloud. What if we could just run that container locally to make sure it works? Well, you can:

```python
def create_env(is_local=True):
    # environment
    env = Environment(name="foodai-pytorch")
    env.python.conda_dependencies = dependencies()

    # the GOOD PART WE LEFT OUT
    # BUT ARE ADDING IN AGAIN
    if is_local:
        # local docker settings
        env.docker.enabled = True
        env.docker.shared_volumes = True
        # mount the local data folder at /data inside the container
        env.docker.arguments = [
            "-v", "C:\\projects\\FoodAI\\data:/data"
        ]

    return env
```

The part I like the most is where we tell the running container how to access the data! Using env.docker.arguments = [ ... ], we tell the container running locally to mount my local data folder at /data and train from there (if you know Docker, you can actually pass whatever arguments you want). Et voilà!

You've basically run your machine learning experiment as if it were in the cloud (but locally, using Docker). The good news is that if it all works well in your local (fake cloud) run, it should work wonderfully as an actual cloud run.
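The two halves of this tip meet inside train.py: the `-v` mount makes the local data folder appear at `/data` inside the container, and the `-d /data` argument from the ScriptRunConfig tells the script to read from there. train.py itself isn't shown in this article, so what follows is only a hypothetical sketch of how its argument handling might look, assuming `-d` is the data directory and `-e` the number of epochs (the long option names, the default, and `main()` are made up).

```python
import argparse
from pathlib import Path


def parse_args(argv=None):
    """Parse the flags the ScriptRunConfig passes to train.py: -d /data -e 1."""
    parser = argparse.ArgumentParser(description="Train the taco/burrito classifier")
    # The long option names and the default are hypothetical.
    parser.add_argument("-d", "--data", type=Path, required=True,
                        help="folder containing the training images")
    parser.add_argument("-e", "--epochs", type=int, default=1,
                        help="number of training epochs")
    return parser.parse_args(argv)


def main(argv=None):
    args = parse_args(argv)
    if not args.data.is_dir():
        # In the local container this usually means the -v mount is wrong.
        raise SystemExit(f"data folder not found: {args.data}")
    print(f"training for {args.epochs} epoch(s) on {args.data}")
    # ... the actual training loop would go here ...
    return args
```

With a `main()` call at the bottom of train.py, a broken mount fails immediately with a readable message instead of a stack trace from deep inside a data loader.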

Tip 2 - Cloud Inference (but locally)

So you've built your amazing model, serialized it, and are ready to do some inference! Again, you likely already wrote the code to do that and you know it works locally. How do we get your masterpiece to work in the cloud? We will use the same principle as shown above: we will run it from a container as if it's in the cloud.

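First things first: AML expects scoring/inference to be done in a specific way. It basically needs an init() and a run() function, and that's it! init() is called once when the service starts, and run() is called for each request with the raw request data. Here's mine (it uses an ONNX model):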

```python
import json
import time
import datetime
from io import BytesIO

import numpy as np
import requests
import onnxruntime as rt
from PIL import Image
from torchvision import transforms

# azureml imports
from azureml.core.model import Model


def init():
    global session, transform, classes, input_name

    try:
        # the registered model, when running inside AML
        model_path = Model.get_model_path('foodai')
    except Exception:
        # fall back to a local file (e.g. when testing outside AML)
        model_path = 'model.onnx'

    classes = ['burrito', 'tacos']
    session = rt.InferenceSession(model_path)
    input_name = session.get_inputs()[0].name
    transform = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])


def run(raw_data):
    prev_time = time.time()

    post = json.loads(raw_data)
    image_url = post['image']
    response = requests.get(image_url)
    response.raise_for_status()
    # force 3 channels so the RGB mean/std in Normalize apply (PNGs may be RGBA)
    img = Image.open(BytesIO(response.content)).convert('RGB')
    v = transform(img)
    pred_onnx = session.run(None, {input_name: v.unsqueeze(0).numpy()})[0][0]

    current_time = time.time()
    inference_time = datetime.timedelta(seconds=current_time - prev_time)

    predictions = {}
    for i in range(len(classes)):
        predictions[classes[i]] = str(pred_onnx[i])

    payload = {
        'time': str(inference_time.total_seconds()),
        'prediction': classes[int(np.argmax(pred_onnx))],
        'scores': predictions
    }

    return payload
```
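Because the contract is just init() followed by run(raw_json_string), you can exercise a scoring script from plain Python before involving Docker at all. This is a minimal sketch: `smoke_test` is a hypothetical helper, and the stub module below stands in for score.py so the example runs without onnxruntime, torchvision, or a model file.

```python
import json
import types

EXPECTED_KEYS = {"time", "prediction", "scores"}


def smoke_test(scoring_module, payload):
    """Mimic the service: call init() once, then run() with a JSON string."""
    scoring_module.init()
    result = scoring_module.run(json.dumps(payload))
    missing = EXPECTED_KEYS - set(result)
    if missing:
        raise AssertionError(f"response is missing keys: {sorted(missing)}")
    return result


# Hypothetical stand-in for score.py so this example runs without a model.
stub = types.ModuleType("stub_score")


def _stub_init():
    stub.classes = ["burrito", "tacos"]


def _stub_run(raw_data):
    post = json.loads(raw_data)  # same first step as the real run()
    scores = [0.1, 0.9] if "taco" in post["image"] else [0.9, 0.1]
    return {
        "time": "0.0",
        "prediction": stub.classes[scores.index(max(scores))],
        "scores": {c: str(s) for c, s in zip(stub.classes, scores)},
    }


stub.init = _stub_init
stub.run = _stub_run
```

With the real script you would `import score` and pass that module instead. Outside AML, the `except` branch in init() falls back to model.onnx, so that file needs to be in the working directory.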

You might be wondering about this line: model_path = Model.get_model_path('foodai'). That's an awesome question! AML has a feature where we can register and version our models. This score.py script simply loads the named model registered in the service (falling back to a local model.onnx file if it can't). Once you have the scoring script and the registered model, you can use the Azure ML CLI to deploy:

```
az ml model deploy --name foodai-local --model foodai:5 ^
    --entry-script score.py --runtime python --conda-file foodai_scoring.yml ^
    --compute-type local --port 32267 ^
    --overwrite
```

(The ^ characters are Windows command-prompt line continuations; in bash, use \ instead.)

The foodai_scoring.yml is a standard conda environment file. The beauty of this deploy, however, is that it all runs in a local container! The foodai:5 means version 5 of the foodai model registered in AML. Running that command starts the cloud service locally.

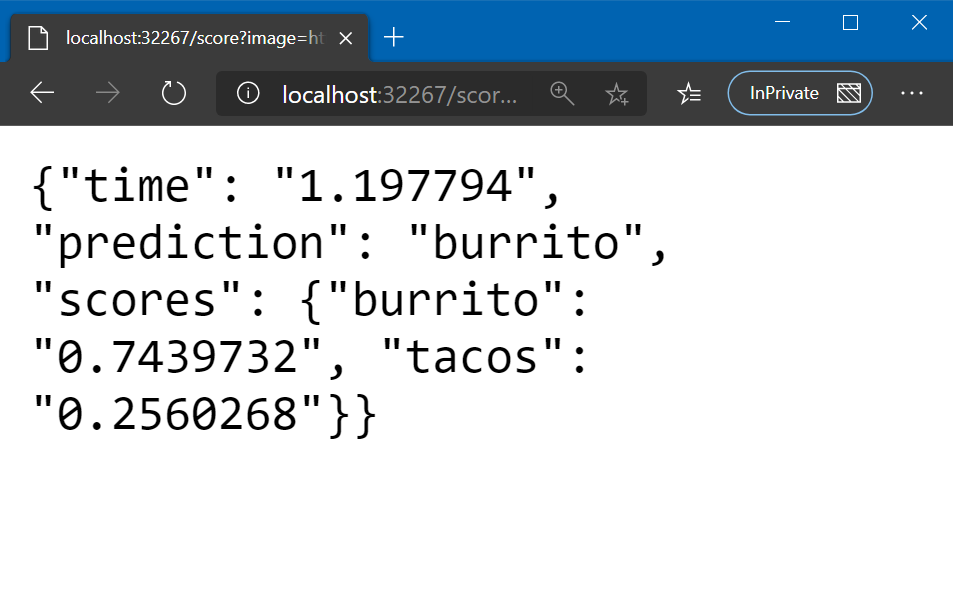

It works!
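With the local service running, you can also call it yourself. Here is a minimal sketch using only the standard library. It assumes the service is reachable at `http://localhost:32267/score`: the port comes from the deploy command, while `/score` is assumed to be the default scoring route, so check the scoring URI the CLI reports. `build_request` and `score` are hypothetical helper names. The request body mirrors what run() in score.py reads: a JSON object with an `image` URL.

```python
import json
import urllib.request

# Assumption: local deployment on the --port passed to `az ml model deploy`,
# exposing the default /score route.
SCORING_URI = "http://localhost:32267/score"


def build_request(image_url, scoring_uri=SCORING_URI):
    """Build a POST whose JSON body matches what score.py's run() reads."""
    body = json.dumps({"image": image_url}).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def score(image_url, scoring_uri=SCORING_URI, timeout=60):
    """Send the request and decode the JSON payload returned by run()."""
    request = build_request(image_url, scoring_uri)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `score(...)` with a taco or burrito image URL should return a dictionary with the `time`, `prediction`, and `scores` keys built by run().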

Again, the great news is that if it works locally, it should totally work on Azure! There are lots of options for where on Azure you can deploy (but I will leave that for another time).
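The foodai_scoring.yml file used by the deploy commands isn't shown in the article. As a hypothetical sketch, here is one way to generate a standard conda environment file from the same package lists used in dependencies() in Tip 1. The environment name, the conda/pip split, and therefore the resulting file are assumptions, not the author's actual file.

```python
# Package lists mirroring dependencies() from Tip 1; whether the scoring
# environment uses exactly these packages is an assumption.
CONDA_PACKAGES = ["matplotlib"]
PIP_PACKAGES = ["numpy", "pillow", "requests", "torchvision",
                "onnxruntime", "azureml-defaults"]


def environment_yml(name, conda_packages, pip_packages):
    """Render a minimal conda environment file with a nested pip section."""
    lines = [f"name: {name}", "dependencies:"]
    lines += [f"  - {pkg}" for pkg in conda_packages]
    if pip_packages:
        # list pip itself so conda installs it for the pip section
        lines.append("  - pip")
        lines.append("  - pip:")
        lines += [f"    - {pkg}" for pkg in pip_packages]
    return "\n".join(lines) + "\n"
```

Writing `environment_yml("foodai-scoring", CONDA_PACKAGES, PIP_PACKAGES)` to a file gives you something you can also pass to `conda env create -f`, so the exact environment the service uses can be recreated outside AML.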

For reference, the CLI command for actually deploying to the cloud is very similar:

```
az ml model deploy --name foodai --model foodai:5 ^
    --entry-script score.py --runtime python --conda-file foodai_scoring.yml ^
    --deploy-config-file deployconfig.json --compute-target sauron ^
    --overwrite
```

The main difference is the deployconfig.json file, which describes the cloud target for the deployment (common targets are AKS and ACI), together with --compute-target, which names the compute to deploy to (sauron here). These replace the local --compute-type and --port options.

Tip 3 - Logs, Logs, Logs

Let's say we've got everything running well, both locally and in containers that should behave just like the cloud, and things still break! This brings us to our last tip: LOGS, LOGS, LOGS.

Experiment

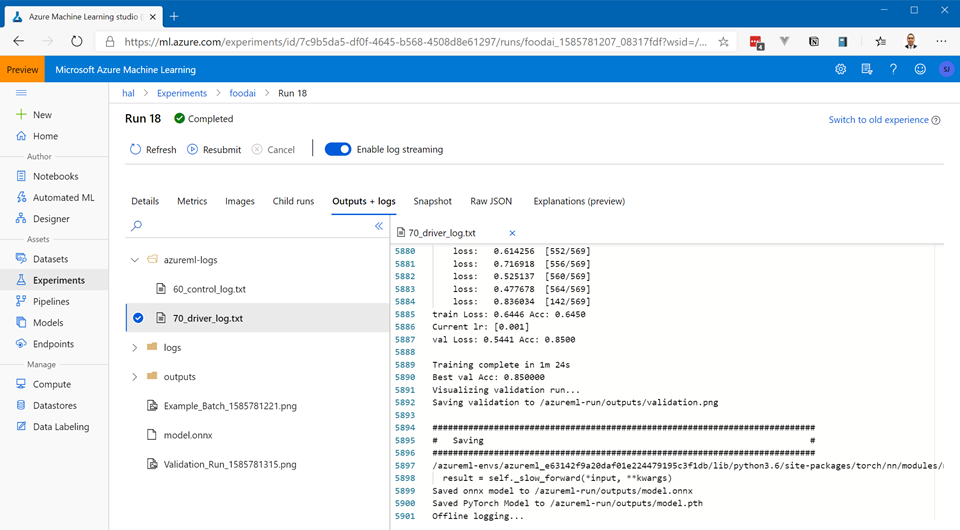

Every experiment run produces output (you can see the output of my local run above). It turns out AML saves all of it!

Of all of the saved logs, 70_driver_log.txt is the one that stores the output of your training script. This is where you will find any additional issues once you move to the cloud (although if it runs locally in the AML context it should totally work in the cloud).
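Once you've downloaded 70_driver_log.txt from the run's logs, the interesting part is usually the last Python traceback. Here is a minimal sketch, assuming the log contains the script's stderr as plain lines. The helper name is hypothetical.

```python
def last_traceback(lines):
    """Return the lines of the last Python traceback in a log, or [] if none."""
    start = None
    for i, line in enumerate(lines):
        if line.startswith("Traceback (most recent call last):"):
            start = i
    if start is None:
        return []
    block = [lines[start]]
    for line in lines[start + 1:]:
        block.append(line)
        # The traceback ends at the first unindented line ("SomeError: ...").
        if line and not line[0].isspace():
            break
    return block
```

Feeding it `Path("70_driver_log.txt").read_text().splitlines()` prints straight to the error line, which for the forgot-a-package mistakes from Tip 1 is typically a `ModuleNotFoundError`.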

Inference

Getting logs from a deployed inference service is even easier:

```
az ml service get-logs --name foodai
```

where foodai is the name of the deployed service. If we pipe the output to a file, we get something like:

```
[
"2020-03-31T18:35:33,706420083+00:00 - gunicorn/run
2020-03-31T18:35:33,706724585+00:00 - iot-server/run
2020-03-31T18:35:33,706480083+00:00 - rsyslog/run
... TRUNCATED ...
2020-03-31 18:35:35.153610654 [W:onnxruntime:, graph.cc:2413 CleanUnusedInitializers] Removing initializer '0.layer2.0.bn1.num_batches_tracked'. It is not used by any node and should be removed from the model.
Users's init has completed successfully
Scoring timeout setting is not found. Use default timeout: 3600000 ms
",
null
]
```

Review

To review our three tips:

- We can run our machine learning experiments in a cloud context using Docker (without having to know too much about Docker)

- We can run our inference services in a cloud context using Docker (without having to know anything about Docker)

- We can view rich logs for both experiments and inference services.

Reference

- Food AI GitHub Project

- Local Experiment Code

- Azure Machine Learning service

- ScriptRunConfig

- RunConfiguration

- Environment

- CondaDependencies

- Scoring File for Inference

- Registering and Versioning Models

- Azure ML CLI

- Inference Service Deployment

- Azure Kubernetes Service Deployment

- Azure Container Instance Deployment
